Data Alignment, Aggregation and Vectorisation (DAAV) BB – Design Document – Prometheus-X Components & Services

Data Alignment, Aggregation and Vectorisation (DAAV) BB – Design Document

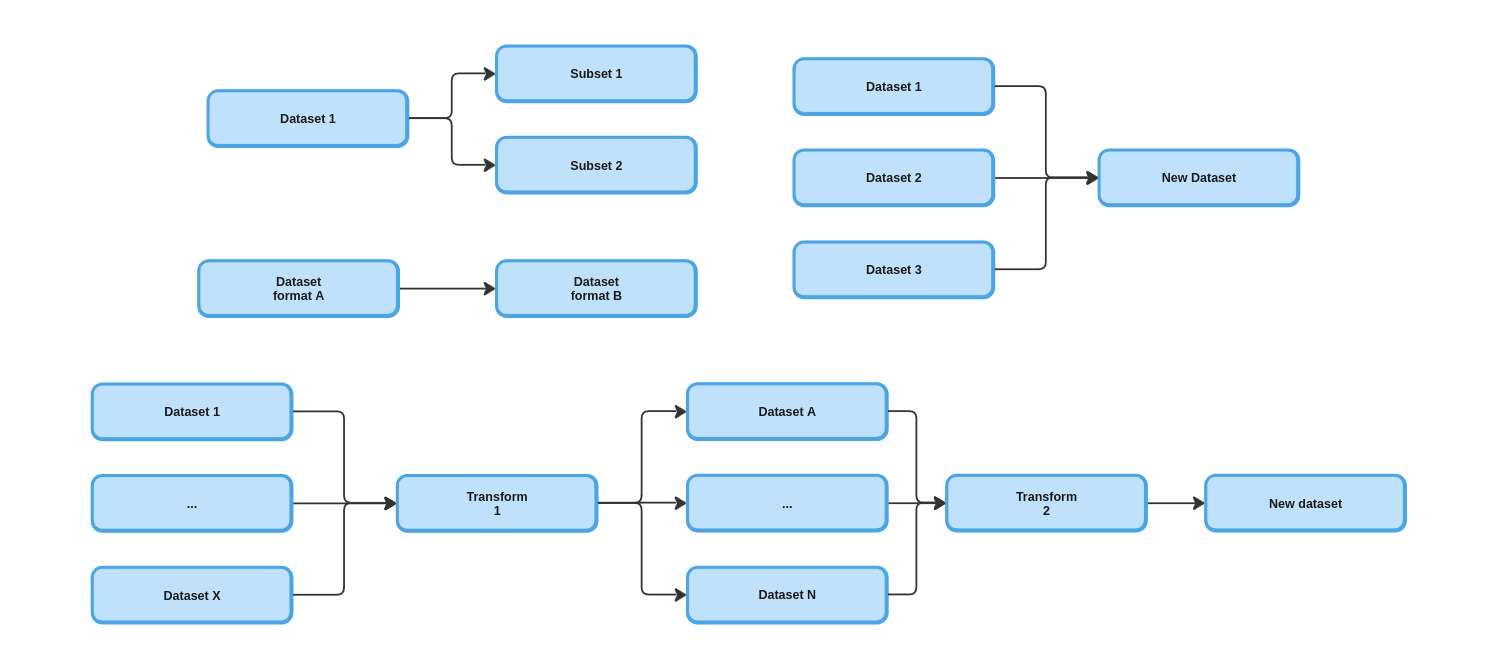

The purpose of the DAAV building block is to set up data manipulation pipelines that create new datasets by:

- combining data from various sources

- filtering data to create subsets

- converting data from one format to another

- calculating new data
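As a rough illustration of these four operations, the sketch below applies them to two small in-memory datasets using only the Python standard library. The record fields (`learner_id`, `score`, `name`) and the function names are invented for this example and are not part of DAAV.

```python
import csv
import io

# Hypothetical sample data standing in for two data sources.
scores = [
    {"learner_id": 1, "score": 12},
    {"learner_id": 2, "score": 17},
    {"learner_id": 3, "score": 8},
]
learners = [
    {"learner_id": 1, "name": "Ada"},
    {"learner_id": 2, "name": "Linus"},
    {"learner_id": 3, "name": "Grace"},
]


def combine(left, right, key):
    """Combine two lists of records on a shared key (inner join)."""
    index = {row[key]: row for row in right}
    return [{**row, **index[row[key]]} for row in left if row[key] in index]


def keep(rows, predicate):
    """Filter rows to create a subset."""
    return [row for row in rows if predicate(row)]


def add_column(rows, name, func):
    """Calculate a new value for every row."""
    return [{**row, name: func(row)} for row in rows]


def to_csv(rows):
    """Convert a non-empty list of flat records to CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


combined = combine(scores, learners, "learner_id")
passed = keep(combined, lambda row: row["score"] >= 10)
graded = add_column(passed, "percent", lambda row: row["score"] * 5)
csv_text = to_csv(graded)
```

In DAAV each of these steps would correspond to a block in the visual editor rather than to a hand-written function.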

The project is divided into two modules:

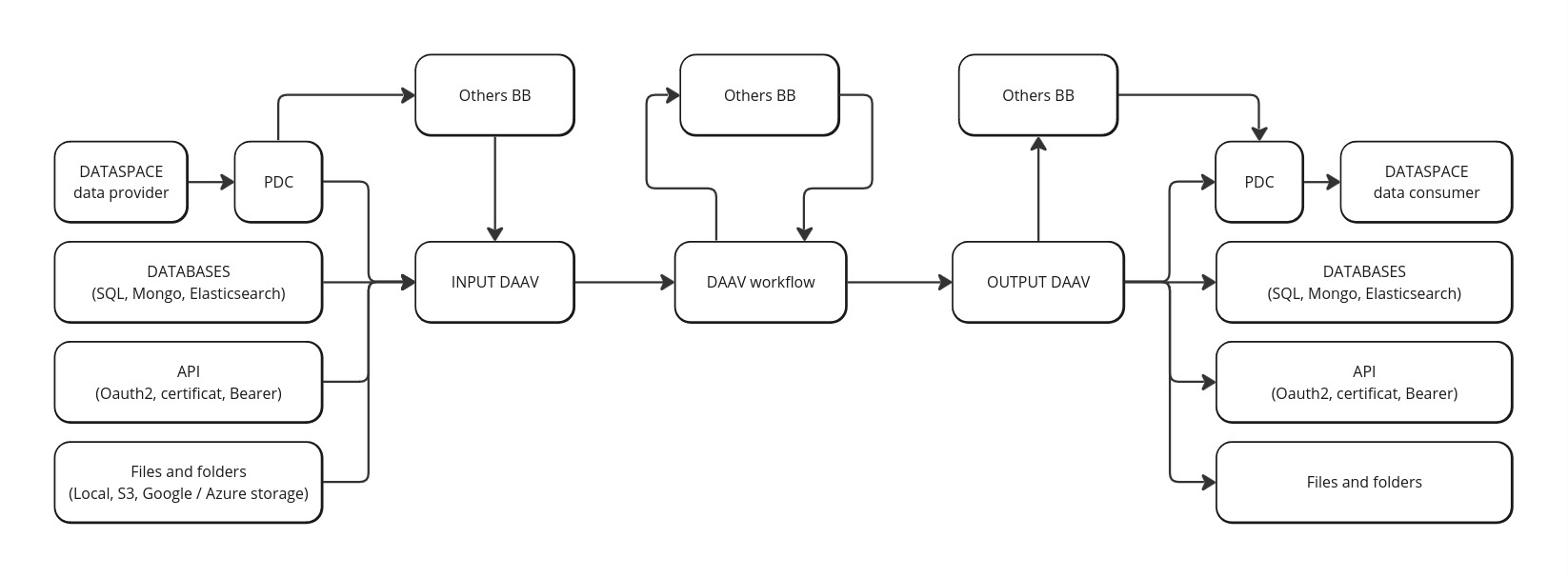

- The front-end offers a user interface to connect various data sources (Prometheus Data Connector, databases, files, APIs), visualize them and manipulate them (alignment, aggregation and vectorisation). Data manipulation uses a visual programming system in which pipelines are built by connecting blocks. Basic management of roles, organisations and users is available.

- The back-end provides an API to manage front-end actions, to execute the data manipulation and to expose data.

Blocks are divided into three groups:

- Input block: each block represents a dataset from the Prometheus dataspace or from an external source.

- Transform block: manipulates and transforms data.

- Output block: stores the result and/or exposes the new dataset on the Prometheus dataspace.

Technical usage scenarios & Features

The first main objective of this building block is to reduce entry barriers for data providers and AI service clients by simplifying the handling of heterogeneous data and its conversion to standard or service-specific formats.

The second main objective is to simplify and optimize data management and analysis in complex environments.

Features/main functionalities

Create a connector from any input data model to any output model. Examples :

- xAPI custom model to xAPI standardized model

- Research datasets (MNIST, Common Voice, etc.) to custom vector

- Custom skills framework to any framework

- Subset of main dataset

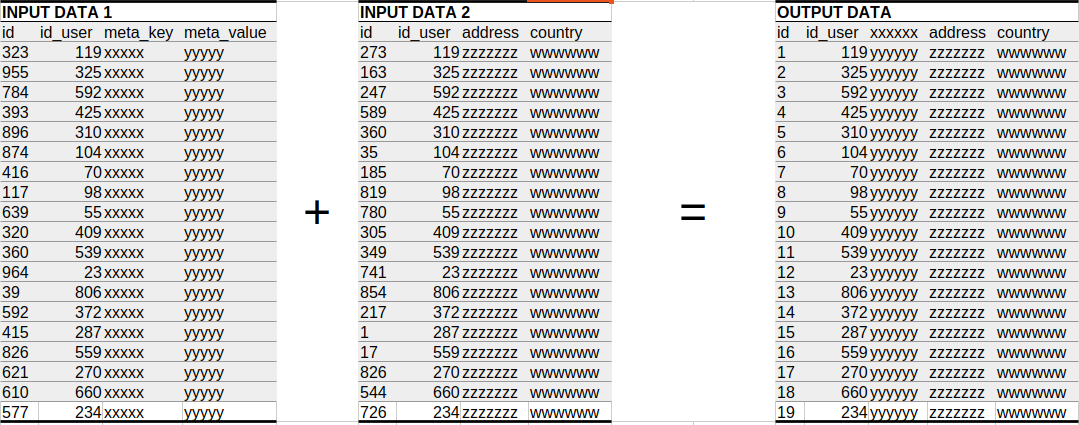

Create an aggregator from different data sources:

- link pseudonymised data with identification data for customer follow-up

- mix data from various subcontractors for a global overview

Receive pushed data from PDC.

Tools to describe and explore datasets.

Expert system and AI automatic data alignment.

Technical usage scenarios

- Creation of a new dataset from different sources

- Aggregation of data from multiple sources, ensuring data compatibility and standardization. This is useful for data analysts who often work with fragmented datasets.

- Providing a mechanism to transform and standardize data formats, such as converting xAPI data to a standardized xAPI format.

- Merging datasets with different competency frameworks to create a unified model.

- Optimize data manipulation and freshness based on user’s needs.

- Give access to specific data based on user rights (contracts and organisation roles)

- Paul is CMO and wants to follow KPIs on each product of his enterprise. He has access to specific data prepared by his IT team.

- Arsen is a full-stack developer and wants to verify the integrity of the data generated by his code. He configures his own data for this purpose.

- Christophe is an AI developer and wants to prepare his data for training. He creates pipelines with continuous integration.

- Fatim is a data analyst and has been given access to a dataset to calculate specific metrics : mean, quantile, min, max, etc.

- Manage inputs and outputs for other building blocks or AI services.

- Prepare data for Distributed Data Visualization.

- Prepare input and receive (and store) output for Data Veracity and Edge translators.

- Create subsets of a main dataset to test performance of an AI service on each set.

- Front-end: the user can retrieve a Prometheus-X dataspace resource or add an external resource, and build a transformation workflow from it.

- Back-end: exposes a public endpoint to execute a transformation workflow or to get data; supports script-oriented automation.

- Database: internal database of the building block, used to store user resource locations and workflows.
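For the data analyst scenario listed above (mean, quantile, min, max), a minimal sketch using only Python's `statistics` module could look like the following. The `describe` helper and the sample values are hypothetical; DAAV itself may compute such metrics differently, for example through its query engine.

```python
import statistics


def describe(values):
    """Return basic descriptive metrics for a sequence of numbers."""
    values = list(values)
    # quantiles(n=4) returns the three quartile cut points.
    q1, median, q3 = statistics.quantiles(values, n=4)
    return {
        "count": len(values),
        "mean": statistics.fmean(values),
        "min": min(values),
        "max": max(values),
        "q1": q1,
        "median": median,
        "q3": q3,
    }


summary = describe([4, 8, 15, 16, 23, 42])
```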

Requirements

BB MUST communicate with the catalog API to retrieve contracts.

BB MUST communicate with the PDC to trigger data exchanges.

BB MUST communicate with the PDC to get data from the Contract and Consent BBs.

BB CAN receive data pushed by the PDC.

BB CAN connect to other BBs.

BB MUST expose endpoints to communicate with other BBs.

BB SHOULD be able to process any type of data as input.

Expected request times:

| Request type | Expected response time |
|---|---|
| Simple request | < 100 ms |
| Medium request | < 3000 ms |
| Large request | < 10000 ms |

Integrations

Direct Integrations with Other BBs

No other building block interacting with this building block requires specific integration.

Relevant Standards

Data Format Standards

JSON - CSV - NoSQL (MongoDB, Elasticsearch) - SQL - xAPI - Parquet - Archive (tar, zip, 7z, rar).

Mapping to Data Space Reference Architecture Models

DSSC :

- Data Interoperability / Data Exchange: capabilities relating to the actual exchange and sharing of data.

- Data Sovereignty and Trust /

- Data Value Creation Enablers /

- Data, Services and Offering Descriptions: this building block provides data providers with the tools to describe data, services and offerings appropriately, thoroughly, and in a manner that will be understandable by any participant in the data space. It also includes related data policies and how they can be obtained.

- Publication and Discovery: this building block allows data providers to publish the description of their data, services and offerings so that they can be discovered by future potential users, following the FAIR (Findable, Accessible, Interoperable and Reusable) principles as much as possible.

- Value-Added Services: this building block complements the data space enabling services by providing additional services to create value on top of data transactions. These services are structured capabilities to facilitate certain tasks, processes, or functions that these users need, directly or indirectly, for their operations.

*IDS RAM* 4.3.3 Data as an Economic Good

Input / Output Data

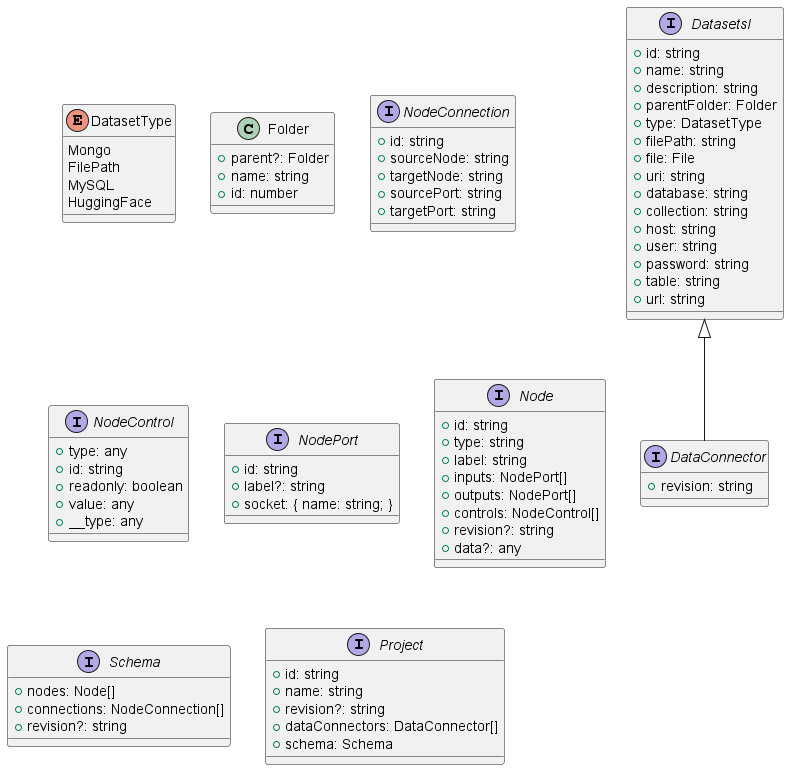

Project interface

The Project interface holds all the information required for a DAAV project. The front-end can import and export JSON content that follows this structure.

The back-end can execute the workflow described in this structure. The structure also contains the Data Connectors required to connect to each data source.

A workflow is represented by nodes (Node) whose inputs and outputs (NodePort) can be connected. Nodes are divided into three groups:

- Input nodes for data sources.

- Transform nodes to manipulate data.

- Output nodes to export newly created data.

An execution may be a complete run, or a test of a single node in the chain that takes the hierarchical dependencies between connected nodes into account.
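To make the structure more concrete, here is a minimal sketch of how such a project could be modelled and round-tripped through JSON. Only the names Node, NodePort and Data Connector come from this document; every field name below (`socket_type`, `kind`, `params`, `connections`, and so on) is an assumption made for illustration, and the real interface may differ.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class NodePort:
    name: str
    socket_type: str  # hypothetical: format carried by this port


@dataclass
class Node:
    id: str
    kind: str  # hypothetical: "input", "transform" or "output"
    inputs: list = field(default_factory=list)
    outputs: list = field(default_factory=list)
    params: dict = field(default_factory=dict)


@dataclass
class Connection:
    source_node: str
    source_port: str
    target_node: str
    target_port: str


@dataclass
class Project:
    name: str
    data_connectors: list = field(default_factory=list)
    nodes: list = field(default_factory=list)
    connections: list = field(default_factory=list)

    def to_json(self):
        """Export the whole project as JSON text."""
        return json.dumps(asdict(self), indent=2)

    @classmethod
    def from_json(cls, text):
        """Rebuild a project from JSON text produced by to_json."""
        raw = json.loads(text)
        nodes = [
            Node(
                id=n["id"],
                kind=n["kind"],
                inputs=[NodePort(**p) for p in n["inputs"]],
                outputs=[NodePort(**p) for p in n["outputs"]],
                params=n["params"],
            )
            for n in raw["nodes"]
        ]
        connections = [Connection(**c) for c in raw["connections"]]
        return cls(raw["name"], raw["data_connectors"], nodes, connections)


project = Project(
    name="demo",
    data_connectors=[{"id": "c1", "type": "file", "path": "data.json"}],
    nodes=[
        Node("n1", "input", outputs=[NodePort("out", "deep")], params={"connector": "c1"}),
        Node("n2", "output", inputs=[NodePort("in", "deep")], params={"filename": "result.json"}),
    ],
    connections=[Connection("n1", "out", "n2", "in")],
)
restored = Project.from_json(project.to_json())
```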

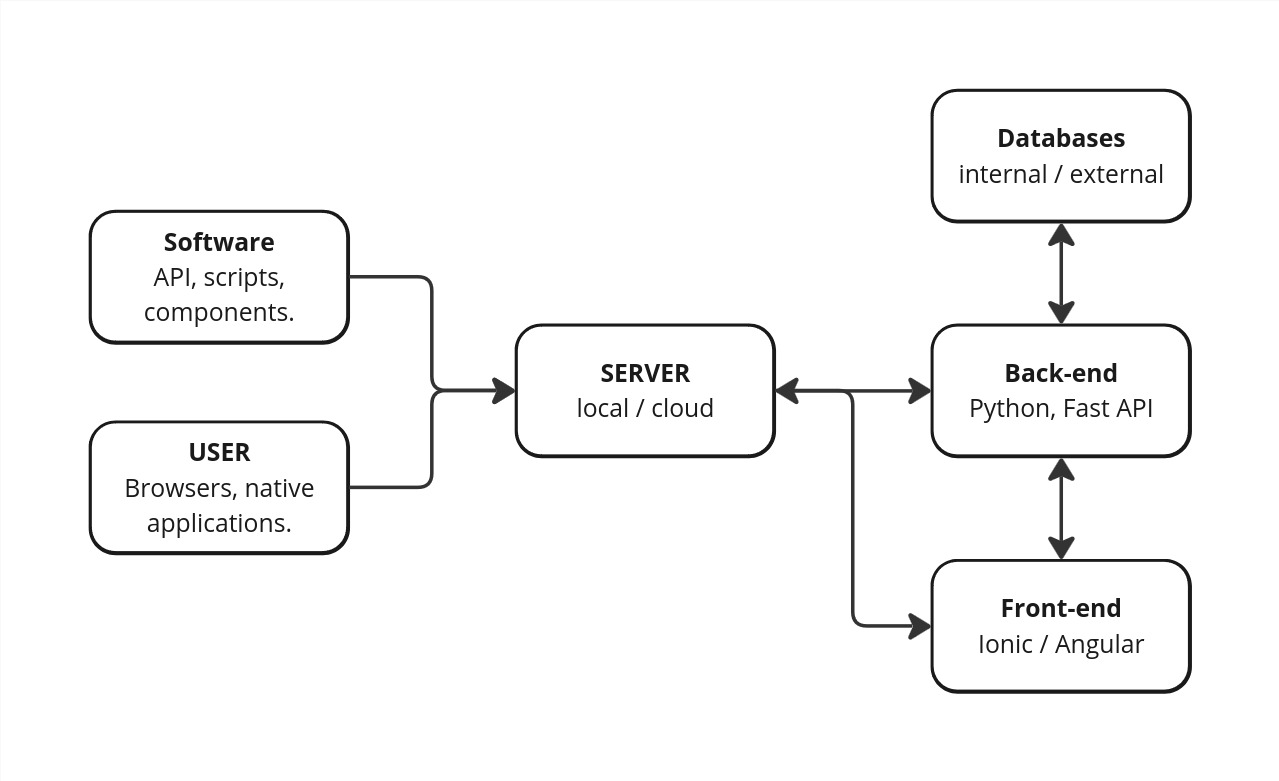

Architecture

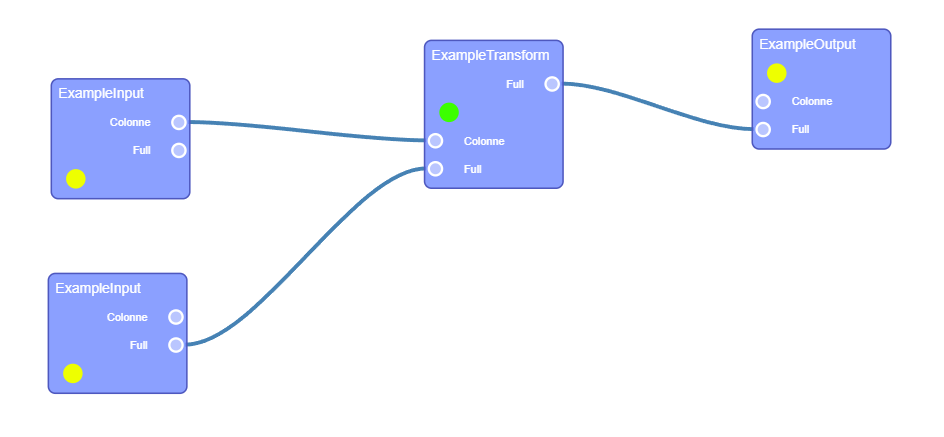

Front-end workflow editor class diagram

This diagram describes the basic architecture of the front-end, whose purpose is to provide the user with a set of tools for building a processing chain.

It is based on Rete.js, a framework for creating processing-oriented node-based editors.

A workflow is a group of nodes connected through ports (inputs/outputs). Each port has a socket type that defines the data format it can carry, and therefore the connection rules between nodes.

Example of a node-based editor: each node contains inputs and/or outputs and a colored indicator showing its status.

Back-end workflow class diagram

The back-end class reconstructs a representation of the defined workflow and executes the processing chain, taking dependencies into account.

For each node, we know its type, and therefore its associated processing, as well as its inputs and outputs, and the internal attributes defined by the user via the interface.
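One common way to derive a dependency-respecting execution order from the connections is a topological sort. The sketch below uses Python's standard `graphlib` module; it is an illustration of the idea, not the DAAV implementation (which, per the Dynamic Behaviour section, propagates execution recursively through parent nodes). The node names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter


def execution_order(connections):
    """Return node ids ordered so that every node comes after its parents.

    `connections` is an iterable of (source_node, target_node) pairs.
    Raises graphlib.CycleError if the workflow contains a cycle.
    """
    sorter = TopologicalSorter()
    for source, target in connections:
        sorter.add(target, source)  # target depends on source
    return list(sorter.static_order())


order = execution_order(
    [
        ("csv_input", "flatten"),
        ("api_input", "merge"),
        ("flatten", "merge"),
        ("merge", "file_output"),
    ]
)
```

A cycle such as `[("a", "b"), ("b", "a")]` raises `CycleError`, which is a convenient place to reject invalid workflows before execution.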

Dynamic Behaviour

The sequence diagram shows how the component communicates with other components.

---

title: Sequence Diagram Example (Connector Data Exchange)

---

sequenceDiagram

participant i1 as Input Data Block (Data Source)

participant ddvcon as PDC

participant con as Contract Service

participant cons as Consent Service

participant dpcon as Data Provider Connector

participant dp as Participant (Data Provider)

participant i2 as Transformer Block

participant i3 as Merge Block

participant i4 as Output Data Block

participant enduser as End User

i1 -) ddvcon: Trigger consent-driven data exchange<br>BY USING CONSENT

ddvcon -) cons: Verify consent validity

cons -) con: Verify contract signature & status

con --) cons: Contract verified

cons -) ddvcon: Consent verified

ddvcon -) con: Verify contract & policies

con --) ddvcon: Verified contract

ddvcon -) dpcon: Data request + contract + consent

dpcon -) dp: GET data

dp --) dpcon: Data

dpcon --) ddvcon: Data

ddvcon --) i1: Data

i1 -) i2: Provide data connection or data

Note over i2 : setup of transformation

i2 -) i3: Provide data

Note over i3 : setup merge with another data source

i3 -) i4: Provide data

Note over i4 : new data is available

enduser -) i4: Read file directly from local filesystem

enduser -) i4: Read file through SFTP protocol

enduser -) i4: Read data through REST API

enduser -) i4: Read data through database connector

---

title: Node status - on create or update

---

stateDiagram-v2

classDef Incomplete fill:yellow

classDef Complete fill:orange

classDef Valid fill:green

classDef Error fill:red

[*] --> Incomplete

Incomplete --> Complete: parameters are valid

state fork_state <<choice>>

Complete --> fork_state : Backend execution

fork_state --> Valid

fork_state --> Error

%% state fork_state2 <<choice>>

%% Error --> fork_state2 : User modify connection/parameter

%% fork_state2 --> Complete

%% fork_state2 --> Incomplete

class Incomplete Incomplete

class Complete Complete

class Valid Valid

class Error Error
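The node status diagram above can be read as a small state machine. The following sketch encodes its transitions in Python; the enum, the function names and the color mapping container are illustrative assumptions, while the states and colors are taken from the diagram.

```python
from enum import Enum


class NodeStatus(Enum):
    INCOMPLETE = "incomplete"
    COMPLETE = "complete"
    VALID = "valid"
    ERROR = "error"


# Colors used by the diagram for each status.
STATUS_COLOR = {
    NodeStatus.INCOMPLETE: "yellow",
    NodeStatus.COMPLETE: "orange",
    NodeStatus.VALID: "green",
    NodeStatus.ERROR: "red",
}


def status_after_edit(parameters_valid):
    """A created or updated node is Complete only if its parameters are valid."""
    return NodeStatus.COMPLETE if parameters_valid else NodeStatus.INCOMPLETE


def status_after_execution(current, succeeded):
    """Back-end execution moves a Complete node to Valid or Error."""
    if current is not NodeStatus.COMPLETE:
        raise ValueError("only a Complete node can be executed")
    return NodeStatus.VALID if succeeded else NodeStatus.ERROR
```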

Back-end node execution: the Node parent class provides the "Execute" function, and each child class implements its own "Process" function with its specific processing.

Inside a workflow, execution propagates recursively through the parent nodes.

---

title: Backend - Node class function "Execute"

---

stateDiagram-v2

classDef Incomplete fill:yellow

classDef Complete fill:orange

classDef Valid fill:green

classDef Error fill:red

state EachInput {

[*] --> ParentNodeStatus

ParentNodeStatus --> ParentNodeValid

ParentNodeStatus --> ParentNodeComplete

ParentNodeStatus --> ParentNodeIncomplete

ParentNodeIncomplete --> [*]

ParentNodeValid --> [*]

ParentNodeComplete --> ParentNodeStatus : Parent Node function "Execute"

ParentNodeStatus --> ParentNodeError

ParentNodeError --> [*]

}

[*] --> NodeStatus

NodeStatus --> Complete

NodeStatus --> Incomplete

Incomplete --> [*] : Abort

Complete --> EachInput

state if_state3 <<choice>>

EachInput --> if_state3 : Aggregate Result

if_state3 --> SomeInputIncomplete

if_state3 --> AllInputValid

if_state3 --> SomeInputError

SomeInputIncomplete --> [*] : Abort

AllInputValid --> ProcessNode: function "Process"

SomeInputError --> [*] : Abort

ProcessNode --> Error

ProcessNode --> Valid

Valid --> [*] :Success

Error --> [*] :Error

class Incomplete Incomplete

class SomeInputIncomplete Incomplete

class ParentNodeIncomplete Incomplete

class ParentNodeComplete Complete

class Complete Complete

class Valid Valid

class AllInputValid Valid

class ParentNodeValid Valid

class Error Error

class SomeInputError Error

class ParentNodeError Error
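The "Execute" diagram above can be sketched in Python as follows. This is a simplified reading of the diagram, not the actual back-end code: the class layout, the `output` attribute and the three example node classes are assumptions, and the sketch additionally assumes that a node which is already Valid or in Error is left untouched.

```python
from abc import ABC, abstractmethod
from enum import Enum


class Status(Enum):
    INCOMPLETE = "incomplete"
    COMPLETE = "complete"
    VALID = "valid"
    ERROR = "error"


class Node(ABC):
    def __init__(self, name, parents=(), status=Status.COMPLETE):
        self.name = name
        self.parents = list(parents)
        self.status = status
        self.output = None

    @abstractmethod
    def process(self, inputs):
        """Node-specific business logic; returns the node output."""

    def execute(self):
        # Abort unless the node itself is Complete (assumption for Valid/Error).
        if self.status is not Status.COMPLETE:
            return self.status
        # Recursively execute every parent that is Complete but not yet run.
        for parent in self.parents:
            if parent.status is Status.COMPLETE:
                parent.execute()
        # Abort if any input is Incomplete or in Error; the node stays Complete.
        if any(parent.status is not Status.VALID for parent in self.parents):
            return self.status
        try:
            self.output = self.process([parent.output for parent in self.parents])
            self.status = Status.VALID
        except Exception:
            self.status = Status.ERROR
        return self.status


class Constant(Node):
    """Hypothetical input node that simply emits a fixed value."""

    def __init__(self, name, value):
        super().__init__(name)
        self.value = value

    def process(self, inputs):
        return self.value


class Sum(Node):
    """Hypothetical transform node that adds its inputs."""

    def process(self, inputs):
        return sum(inputs)


class Failing(Node):
    """Hypothetical node whose processing always fails."""

    def process(self, inputs):
        raise RuntimeError("simulated processing failure")
```

Calling `execute` on the last node of a chain is enough to run everything upstream of it, which also covers the single-node test case.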

Configuration and deployment settings

Various configuration examples:

**.env file :**

MONGO_URI = ''

SQL_HOST = ''

LOG_FILE = "logs/log.txt"

...

**secrets folder (passwords generated with openssl):**

- secrets/db_root_password

- secrets/elasticsearch_admin

**angular environments :**

- production: true / false

- version : X.X

**Python FastAPI back-end config.ini:**

[dev]

DEBUG=True/False

[edge]

master:True/False

cluster=014
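A file in this format can be read with Python's standard `configparser` module, as sketched below. The concrete values (`True`, `False`) are sample choices replacing the `True/False` placeholders above, and how the back-end actually loads its configuration is not specified in this document.

```python
import configparser

# Sample content following the layout above, with concrete values chosen
# for illustration. configparser accepts both "=" and ":" as delimiters.
SAMPLE_CONFIG = """
[dev]
DEBUG=True

[edge]
master:False
cluster=014
"""

parser = configparser.ConfigParser()
parser.read_string(SAMPLE_CONFIG)

debug = parser.getboolean("dev", "DEBUG")        # option names are case-insensitive
is_master = parser.getboolean("edge", "master")
cluster = parser.get("edge", "cluster")          # kept as a string, leading zero preserved
```

Reading `cluster` as a string rather than an integer keeps the leading zero of `014`.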

What are the limits in terms of usage (e.g. number of requests, size of dataset, etc.)?

# work in progress

Third Party Components & Licenses

- Rete.js: MIT license

- Ionic / Angular / Material: MIT license

- FastAPI: MIT license

- DuckDB: MIT license

- PyArrow: Apache License 2.0

Implementation Details

Node implementation:

Every node has a front-end implementation, where the user can set up its configuration, and a back-end implementation that executes the process.

All nodes inherit from the abstract class Nodeblock.

We identify shared functionalities such as:

- Front-end: a status component to track the node status with a visual indicator.

- Front-end: a data method to save the current node configuration, including user modifications.

- Front-end: a run button to execute the node.

- Back-end: an execute function that checks the status of the parent nodes, executes them if possible, and finally calls the node's own logic.

- Back-end: an abstract process function containing the custom business logic.

Input nodes:

All input nodes inherit from InputDataBlock.

We identify shared functionalities such as:

- Front-end: a button to test the connection to the data source, with a visual indicator.

- Back-end: an abstract test connection function.

We need one input node class for each data-source format (local file, MySQL, MongoDB, API, ...).

On the front-end, a factory selects the correct input instance according to the source connector passed as a parameter.
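The combination of an abstract test-connection function and a factory keyed on the connector type can be sketched as below. The pattern is shown in Python for consistency with the other examples, although the factory described here lives in the front-end. Apart from InputDataBlock, all names (`register`, `FileInput`, `MySQLInput`, `create_input_block`, the connector keys) are hypothetical.

```python
import os
from abc import ABC, abstractmethod


class InputDataBlock(ABC):
    def __init__(self, connector):
        self.connector = connector

    @abstractmethod
    def test_connection(self):
        """Return True if the data source can be reached."""


_REGISTRY = {}


def register(source_type):
    """Class decorator that maps a connector type to an input node class."""
    def decorator(cls):
        _REGISTRY[source_type] = cls
        return cls
    return decorator


@register("file")
class FileInput(InputDataBlock):
    def test_connection(self):
        return os.path.isfile(self.connector["path"])


@register("mysql")
class MySQLInput(InputDataBlock):
    def test_connection(self):
        # A real implementation would open a connection; this sketch only
        # checks that the required settings are present.
        return all(key in self.connector for key in ("host", "database"))


def create_input_block(connector):
    """Factory: pick the input node class from the connector's type."""
    try:
        cls = _REGISTRY[connector["type"]]
    except KeyError:
        raise ValueError(f"unsupported data source type: {connector.get('type')!r}") from None
    return cls(connector)
```

Adding support for a new data source then only requires a new decorated subclass; the factory itself does not change.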

Each node instance exposes one output, and its socket type defines its format:

- Flat object: table of data with several columns. Examples: CSV, MySQL results.

- Deep object: collection of data with inner objects.

- xAPI: deep object that follows the xAPI specification.
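To illustrate the difference between a deep object and a flat object, the sketch below flattens a nested record into a single-level row with dotted column names. The `flatten` function and the separator are illustrative assumptions and do not describe DAAV's own flatten transformation; lists are left untouched for simplicity.

```python
def flatten(record, parent_key="", sep="."):
    """Turn a nested dict into a flat dict with dotted keys."""
    flat = {}
    for key, value in record.items():
        full_key = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            flat.update(flatten(value, full_key, sep))
        else:
            flat[full_key] = value
    return flat


# A simplified xAPI-like statement used as a deep object.
statement = {
    "actor": {"name": "Ada", "mbox": "mailto:ada@example.org"},
    "verb": {"id": "http://adlnet.gov/expapi/verbs/completed"},
    "result": {"score": {"scaled": 0.9}},
}
row = flatten(statement)
```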

Connection rules between nodes

Each block input defines a socket, i.e. the kind of data it accepts (float, string, array of strings, etc.).
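A connection rule of this kind can be expressed as a small compatibility table. The sketch below is an assumption about how such rules might look: the socket names mirror the formats listed above, and the rule that an input accepting deep objects also accepts xAPI data is inferred from xAPI being described as a deep object, not stated by the document.

```python
# For each input socket type, the output socket types it accepts (hypothetical).
ACCEPTED_OUTPUTS = {
    "flat": {"flat"},
    "deep": {"deep", "xapi"},  # assumption: xAPI data is a valid deep object
    "xapi": {"xapi"},
}


def can_connect(output_socket, input_socket):
    """Return True if an output of one type may feed an input of another."""
    return output_socket in ACCEPTED_OUTPUTS.get(input_socket, set())
```

An editor would typically call such a check when the user drags a connection, and refuse to create the link when it returns `False`.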

On the back-end counterpart class :

The “Process” function implements the business logic. In this case, it retrieves the data-source information and populates the output with the data schema and the data content.

For very large data content, intermediate results can be stored on disk in Parquet format, probably selected through a shared attribute of InputDataBlock.

Each input node may have rules executed at the request level, with a widget in the front-end to set them up.

Output nodes:

All output nodes inherit from OutputDataBlock.

We need one output node class for each format handled by the BB.

Each output block specifies the inputs it can process.

The block's widgets let the user set up the output and visualize its status.

On the back-end counterpart class:

One method executes the node and either exports a file or launches transactions to fill the destination.

Transform nodes:

All nodes that manipulate data inherit from the transformation node.

A transform node can dynamically add inputs or outputs based on the data being manipulated, and uses widgets to expose parameters to the user.

For complex cases, we can imagine an additional modal window to visualize a sample of the data and provide a convenient interface for describing the desired relationships between data.

Considerations on revision identifiers

The workflow representation can be likened to a tree, where each node can have a unique revision ID representing its contents and its children.

This gives us a convenient pattern to compare two calls to the back-end API and identify the modifications between them.

If intermediate results are stored physically, for example as Parquet files, we can skip processing for any node that keeps the same revision ID between two calls and rebuild only the parts the user has changed.

By keeping track of the last root revision seen by the front-end and the back-end, it is also possible to detect desynchronization when several instances of a project are open, and so make the user aware of the risk of overwriting.
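One possible way to obtain such revision IDs is a hash computed from a node's own parameters and the revision IDs of the nodes it depends on, so that a change anywhere upstream changes every downstream ID. The sketch below is an assumption about how this could be done (SHA-256 over a canonical JSON payload); the function names and the workflow layout are hypothetical.

```python
import hashlib
import json


def revision_id(node_id, params, upstream_revisions):
    """Hash a node's content together with the revisions it depends on."""
    payload = json.dumps(
        {"id": node_id, "params": params, "upstream": upstream_revisions},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def workflow_revisions(nodes, parents):
    """Compute a revision ID for every node.

    `nodes` maps node id -> parameters; `parents` maps node id -> list of
    upstream node ids. The workflow is assumed to be acyclic.
    """
    cache = {}

    def visit(node_id):
        if node_id not in cache:
            upstream = [visit(parent) for parent in parents.get(node_id, [])]
            cache[node_id] = revision_id(node_id, nodes[node_id], upstream)
        return cache[node_id]

    for node_id in nodes:
        visit(node_id)
    return cache


def changed_nodes(old, new):
    """Nodes whose revision differs between two calls and must be recomputed."""
    return {node_id for node_id in new if old.get(node_id) != new[node_id]}


parents = {"flatten": ["input"], "output": ["flatten"]}
before = workflow_revisions(
    {"input": {"path": "a.json"}, "flatten": {"sep": "."}, "output": {"filename": "out.csv"}},
    parents,
)
after = workflow_revisions(
    {"input": {"path": "a.json"}, "flatten": {"sep": "_"}, "output": {"filename": "out.csv"}},
    parents,
)
to_rebuild = changed_nodes(before, after)
```

Here only the `flatten` parameters changed, so the input node keeps its revision and its stored intermediate result could be reused.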

OpenAPI Specification

The back-end will expose a Swagger OpenAPI description.

For every entity described, we will follow a REST API (Create / Update / Delete).

- The User entity represents the end user.

- The Credential entity represents the auth system linked to the user.

- The DataConnector entity represents a data-source location.

- The Project entity represents the end user's workflow.

API for output data blocks (GET):

- Expose data from all defined output data blocks.

Business actions around the project:

- Execute a workflow

- Execute a node

- Get input / output data schemes from a node

- Get input / output data example from a node

- ...

Test specification

Internal unit tests

Front-end:

- Karma for test execution

- Jasmine for unit tests

Unit tests for all core class functions:

- Board render.

- Board tools.

- Import project

- Export project

- Node Factory

- Socket rules interaction between connectable items.

- Revision

Back-end :

- Pytest

Unit tests for all endpoints and core class functions.

Core class :

- Node : Process (each child class specific work)

- Node : Execute

- Workflow : Import/Update workflow

- Workflow : Execute

API endpoints :

- Auth

- Create, update and delete User and Credential

- Create, update and delete DataConnector

- Create, load, save and delete workflow

- Load and execute workflow

- Node: retrieve input/output examples and data schema (front-end visualization)

- Node: test/execute node

Component-level testing

Load testing with k6 to evaluate API performance.

UI test

Front-end: Selenium to test the use and deployment of custom nodes and interaction with custom parameters.

Testing independent features

Manual tests to prove individual functionalities.

Backend Health Check: Verify backend service availability

Objective: Ensure the DAAV backend service is running and responding correctly.

Precondition: DAAV backend service is deployed and accessible.

Steps:

- Send a GET request to the back-end health endpoint (default for a Docker instance: http://localhost:8081/health)

- Verify the response status code is 200

- Verify the response body contains the expected JSON

Expected Result:

{

"status": "healthy",

"app_name": "DAAV Backend API",

"environment": "production",

"version": "2.0.0"

}

Result: Validated.
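The health check steps above could be automated with a short script such as the following sketch, which uses only the Python standard library. The URL and the expected fields come from the test description; the function names and the choice to treat any connection failure as "unhealthy" are assumptions.

```python
import json
from urllib.request import urlopen

EXPECTED_KEYS = {"status", "app_name", "environment", "version"}


def is_healthy(status_code, body):
    """Check the status code and the JSON body of a /health response."""
    try:
        payload = json.loads(body)
    except ValueError:
        return False
    return (
        status_code == 200
        and isinstance(payload, dict)
        and EXPECTED_KEYS <= payload.keys()
        and payload["status"] == "healthy"
    )


def check_backend(url="http://localhost:8081/health", timeout=5.0):
    """Call the health endpoint; unreachable or failing services count as unhealthy."""
    try:
        with urlopen(url, timeout=timeout) as response:
            return is_healthy(response.status, response.read().decode("utf-8"))
    except OSError:  # covers URLError, HTTPError and timeouts
        return False
```

Running `check_backend()` against a local Docker instance should return `True` when the service responds as described in the expected result.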

Test Case 1: Verify dataset creation

Objective: Ensure datasets can be created with different input types.

Precondition: DAAV interface is accessible and functional.

Steps:

- Access DAAV interface

- Click on "Add Dataset"

- Select "File" as type

- Upload a valid JSON file

- Enter a name for the dataset

- Confirm creation

Expected Result: Dataset is created and appears in the list.

Result: Validated.

Test Case 2: Verify workflow creation

Objective: Ensure a workflow can be created and saved.

Precondition: At least one dataset exists.

Steps:

- Navigate to "Transformation" tab

- Click on "New Workflow"

- Drag and drop a DataFileBlock

- Configure the block by selecting the previously created dataset

- Add a transformation: drag and drop a FlattenTransform block

- Add an output: drag and drop a FileOutput block

- Configure the output block by adding a filename

- Connect the blocks

- Save the workflow

Expected Result: Workflow is saved with a unique ID.

Result: Validated.

Test Case 3: Verify workflow execution

Objective: Ensure a workflow can be executed.

Precondition: A valid workflow exists.

Steps:

- Select a saved workflow

- Click the play button

- Observe execution

Expected Result: Workflow executes without error and produces expected result.

Result: Validated.

Partners & roles

Profenpoche (BB leader):

- Organize workshops

- Develop backend of DAAV

- Develop frontend of DAAV

Inokufu :

- BB validation

BME, Cabrilog and Ikigaï are also partners available for beta-testing.

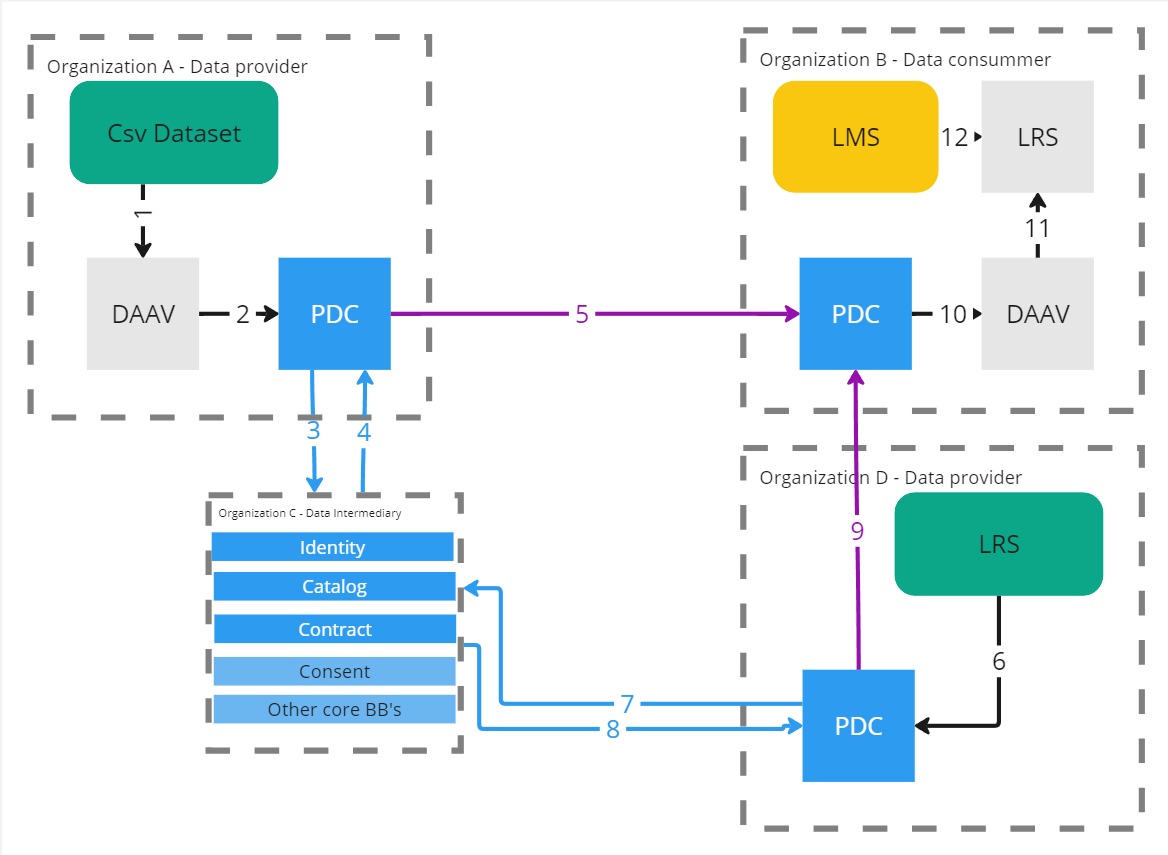

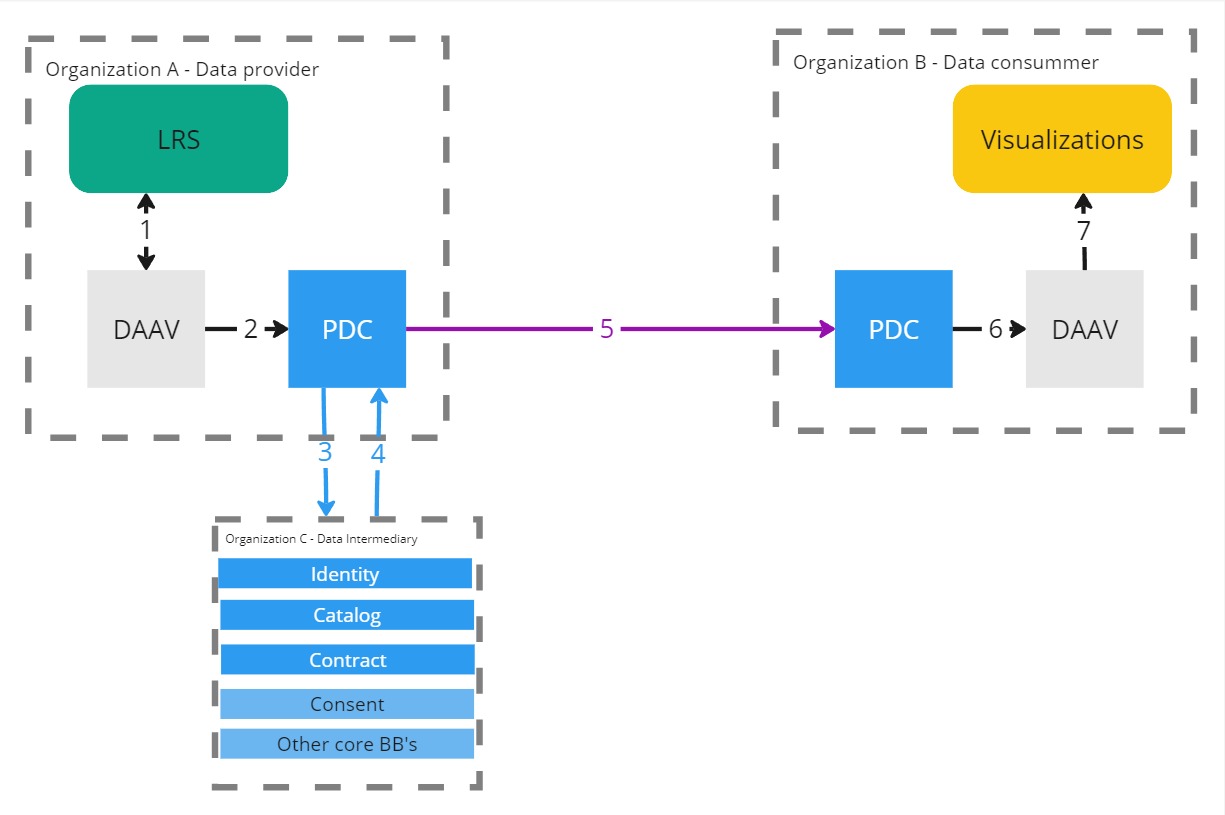

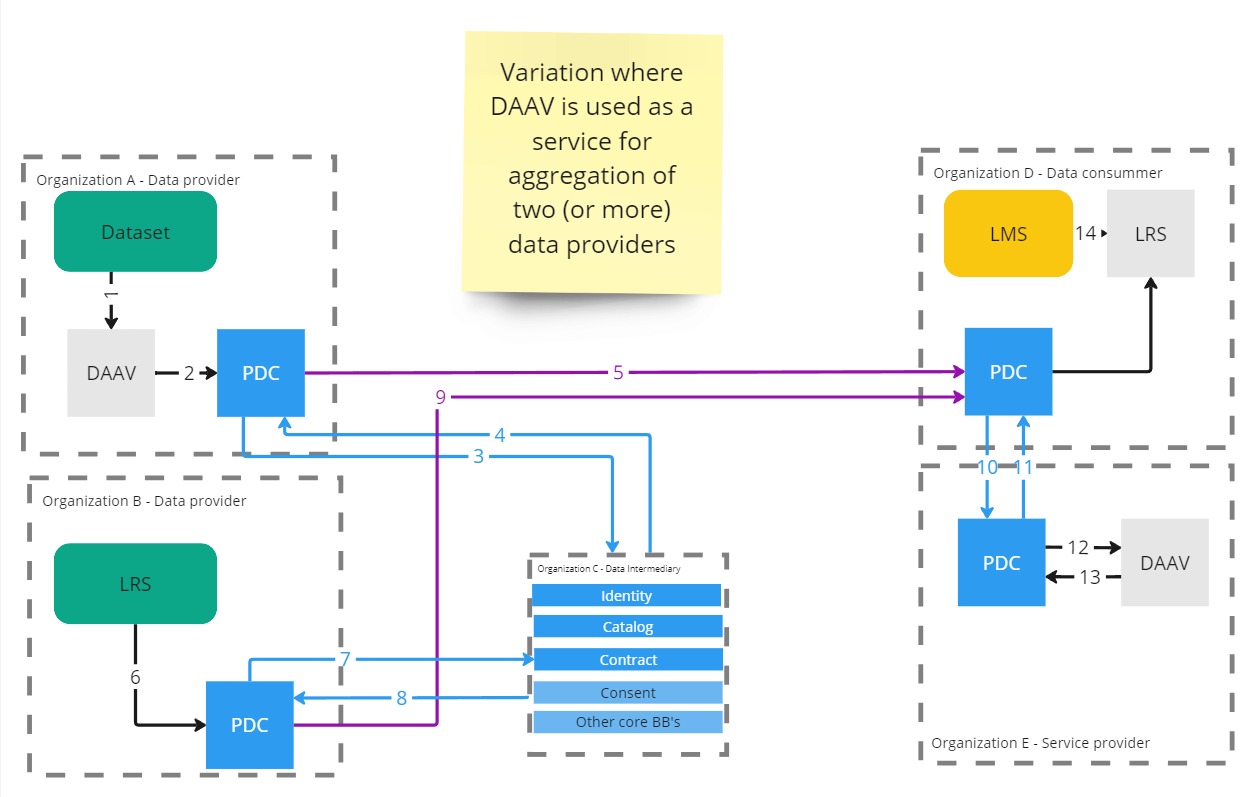

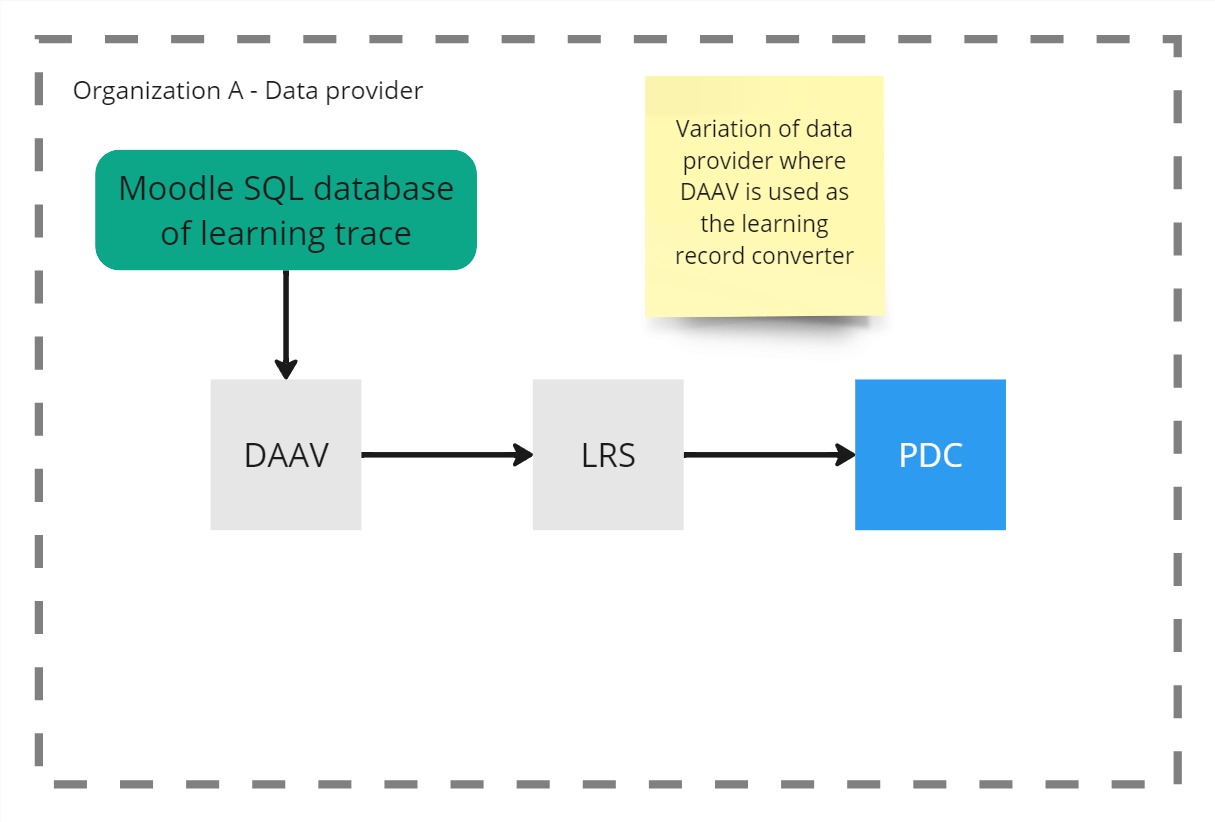

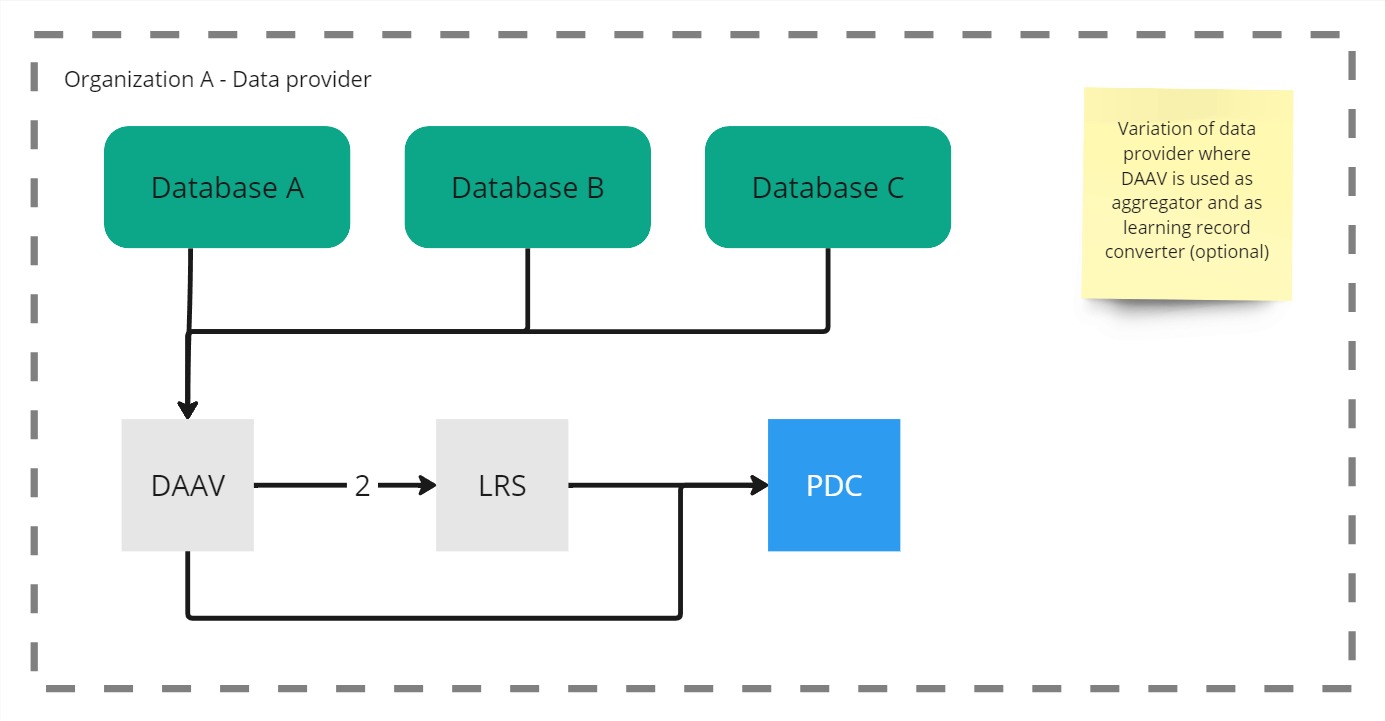

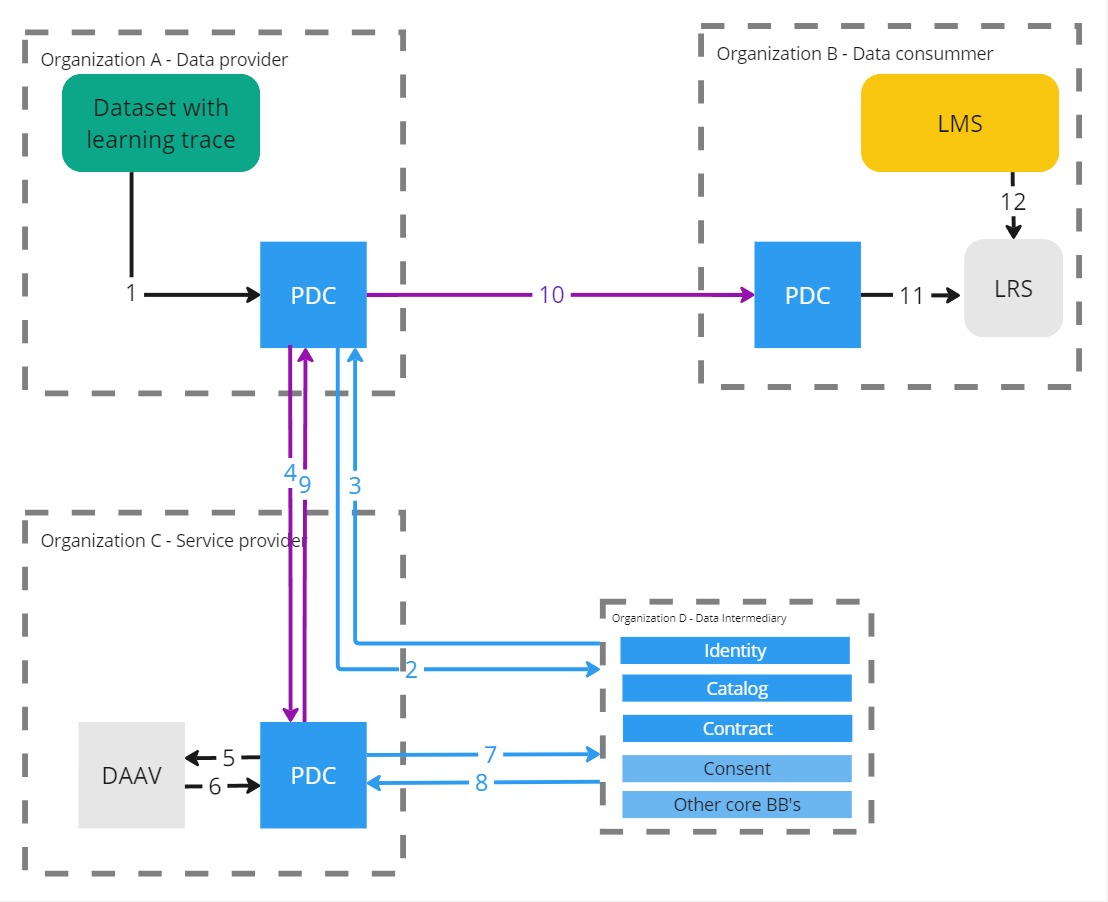

Usage in the dataspace

The DAAV building block can be used as a data transformer to build new datasets from local data or from the Prometheus-X dataspace.

The output can also be shared on the Prometheus-X dataspace.

Example 1

Example 2

Example 3

Example 4

Example 5

Example 6